By David Hatherell and Louis Rossouw

In recent years, the fields of machine learning, deep learning and advanced analytics have revolutionized various domains, including mortality modelling. These cutting-edge technologies offer tangible benefits by enhancing the accuracy, interpretability and efficiency of predictive models.

In this article, we’ll delve into the key concepts of one such technique – LASSO (Least Absolute Shrinkage and Selection Operator) regression – and explore its distinguishing benefits. We will show how LASSO regression stands out as a powerful technique that addresses common challenges associated with having multiple predictor variables.

Understanding LASSO regression

LASSO regression builds upon the principles of generalized linear regression while introducing a regularization term that enhances model performance.

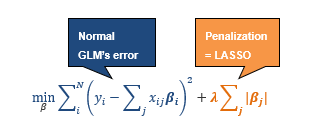

The technique aims to minimize the formula below:

where:

- yⅈ represents the response variable for the ith observation.

- χⅈj represents the jth value of the independent variable for the ith observation.

- βj represents the regression coefficient associated with the jth value of the independent variable.

- λ represents the regularisation term.

In generalized linear models (GLMs), we estimate coefficients using the ordinary least squares (OLS) method. The LASSO technique adds a penalty term to the OLS equation that’s used to minimize error. The total penalty applied is the sum of the absolute value of the coefficients scaled by some lambda. If lambda is zero, we get the OLS equation with all the variables in the model formula.

Increasing lambda forces coefficients closer to zero and results in some of these coefficients being zeroized (effectively removing the variable from the model). In this way the LASSO model identifies which variables have an influence on the actual outcome based on the data that is provided.

Cross validation

Cross validation is commonly used in a range of machine learning techniques to assess and set hyperparameters without using separate hold-out or test data. This is done to reduce the risk of overfitting to the data. Cross validation involves the following steps:

- Breaking the training data into several folds (say five).

- Fitting a model on the four folds of the data and validating on the fifth fold.

- Repeating the process until each fold has been used.

- Taking an average of the error metric calculated over all folds.

In the case of cross validated LASSO regression, cross validation is used to set the lambda parameters. This results in models that depend on the level and consistency of relationships in the data. As such, these models are less prone to overfitting.

Three benefits of cross validated LASSO regression

Variable selection

By incorporating the penalization term, LASSO jointly optimizes two critical aspects:

- Goodness of fit: Like GLMs, LASSO aims for a good fit to the data.

- Coefficient structure: It seeks a desirable structure for the estimated coefficients.

The penalty encourages some coefficients to shrink, and in some cases, even become zero. This tradeoff ensures that the model retains the most important variables while others get removed. The resulting model is simpler, easier to understand and less prone to overfitting.

Even in cases where the data has limited variables, incorporating variable interactions into the model can lead to hundreds or even thousands of unique interacted variables. LASSO can effectively handle interactions among predictor variables including handling collinearity.

One can purposefully simplify a LASSO regression by using higher lambda than that suggested by cross validation to further reduce the number of variables in the model. This may be desirable in some cases.

Weighing existing assumptions

By using a baseline such as an existing mortality table, LASSO models can incorporate existing assumptions. Using a value of lambda, set through cross validation, results in a model that balances the existing assumptions against the data. This model fits closer to the experience, where there is enough data to support it, and closer to the existing assumptions where data is limited.

Interpretability

In addition to having fewer coefficients, LASSO regression provides model output in a format similar to that of GLMs. This consistency is advantageous for actuaries who are already familiar with GLMs. By providing an interpretable formula for generating predictions, LASSO allows practitioners to understand the key drivers behind the model’s predictions, avoiding the complexity of “black box models.”

In summary

LASSO regression combines the advantages of more advanced machine learning approaches with the simplicity of GLMs. It allows one to efficiently select variables from a large pool of possibilities while balancing existing assumptions. The penalty term ensures that only relevant coefficients are included for variables with sufficient supporting data.

Essentially, LASSO regression encourages simple models where only the most important variables contribute significantly to the model output. These models have coefficients that actuaries can easily interpret and use when setting assumptions.

This article reflects the opinion of the authors and does not represent an official statement of the CIA.

References

Tibshirani, Robert. 1996. “Regression Shrinkage and Selection via the Lasso.” Journal of the Royal Statistical Society. Series B (Methodological). Vol. 58, No. (1): 267-88.